What is this?

Implementation of AppendableData, according to design found here: DataStore over AppendableData design

Also a simple EventStore is implemented.

GitHub repo is here: GitHub - oetyng/SAFE.AppendOnlyDb: SAFENetwork AppendableData structure implemented over MutableData, as IValueAD and IStreamAD. Simple EventStore.

Bullets:

-

IStreamAD,IValueADandMutableCollection<T>implementations. -

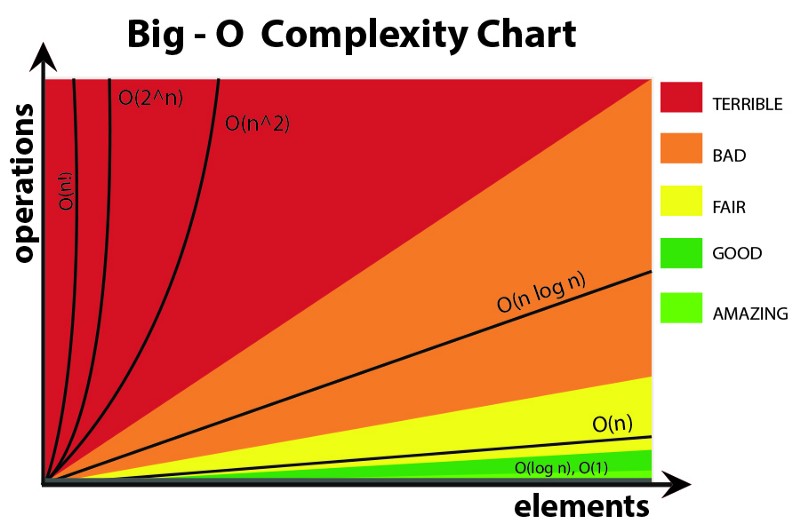

O log n retrieval of single data and O log n to O(n) on ranges from

IStreamAD. -

O log n* retrieval of value from

IValueAD(*by caching current leaf we get O(1) after first request). - Range queries on versions.

- Test coverage for confirming the functionality.

- EventStore over

AppendableData.

Additional goodies:

- Using new language features, such as

IAsyncEnumerable, from C# 8.0

Click here for comparing O log n O(1) etc.:

AppendableData

I wanted to try out ideas for how AppendableData could be designed, as to be able to build a datastore based on it.

I reused much of the logic from SAFE.DataStore, but didn’t aim at shared libraries as I wanted maximal freedom in tailoring this.

As I was working on various ideas for Code: FileSystem - Unlimited Size (working on Mock Network) - #21 by oetyng I hit a small road block with regards to Dokan. Instead I started to look at the WAL (write ahead log) like functionality I wanted to implement there. So, I went ahead with what we know about the coming changes, to try them out.

Short recap

Ordered data tree

The basic idea is the same; we have a data tree, which grows horizontally by adding nodes, and vertically by appending new heads at the root. Just like with the MD based datastore, the very first node is both the root and a leaf. The leaf level is 0. Every new head that is added, gets an incremented Level.

Vertical growth:

Horisontal growth:

New things

There are several differences though:

One is that we automatically have a strict order, by virtue of following the concept of an append only stream with monotonically incremented version. Every appended value is stored in an AD (i.e. MD under the hood) by a key which is the version number.

MutableCollection

Another difference is that we use the MutableCollection<T> class, which is a combination of a ValueAD and a StreamAD, as to emulate a mutable collection. The ValueAD is what gives us a constant reference to a collection. The collection (StreamAD) that the ValueAD current version points at, can of course never be mutated. So whenever we want to mutate (other than append), we create a new StreamAD, and add the reference to the ValueAD.

Range queries

Very similar to how range queries are performed on B+Trees (this data tree is quite similar to one, but does not need balancing); we find a node and follow its links forward or backwards on the same level. One difference is that we also do this in-order traversal on internal nodes, and not only on leaf nodes. If the data spans multiple internal nodes, it can actually split the range request, and perform traversals of the sub-ranges in parallel.

API

The API has been changed to reflect the two uses of an AD, so we have:

IValueAD

IStreamAD

Details

Streaming data

The most heavily used new feature here from C# 8.0 is IAsyncEnumerable. This has given a lot cleaner code. But it is far more than obscure developer aesthetics at play; while we before could of course have non blocking requests for collections, we would still need to wait for all of them (without custom implementations) before continuing. IAsyncEnumerable lets us yield items as they come in, effectively letting us stream out our data to and from the various points.

When running this with in-memory MockNetwork, the performance actually degraded slightly (roughly 30% - probably due to replacing Parallel.Foreach on decrypting of MD entries, with tasks). But in a real network setting, with significant network penalty, the benefits of streaming the data will shine clearly, since various steps in a process pipe can be executed in parallel (as data is passed on to next steps without waiting for all to be completed in previous step).

Snapshotting

The StreamAD supports a specific pattern for snapshotting, in which one entry in each MD is reserved for a snapshot of the 998 data entries (the remaining entry is the meta data).

By supplying a function for applying the events, this can be handled automatically as the AD is growing, every time it fills a segment (i.e. an MD).

Event sourcing

The ReadForwardFrom API supports keeping event sourced aggregates handled in a distributed fashion. Aggregates can by this API be kept synched, through requesting new events since their last known version.

This also simplifies building local projections out of network event streams.

Store any size of data

The underlying logic determines if the data is too large for an MD entry, and will produce an ImD datamap out of it, recursively until the datamap is small enough for the entry.

This is also resolved automatically when fetching the data, so the user doesn’t need to take data size into account.

Indexing

There’s the default index by version, but other than that any indexing will have to be done on side of the writing. No indexer has been implemented in this solution.

Data access

The combination of linking MDs at same level, and storing them - ordered by version - in a tree, makes access times as good as you could expect; O log n. This is because we can route requests through the tree by looking at the Level, and the range parameters.

Point querying the AppendableData (in role IStreamAD):

Range querying the AppendableData (in role IStreamAD):

Remarks

-

As the sharp eyed might have noticed, the ValueAD current value retrieval is not O(1) as specified in the design. However, O log n is the second best, so I’m not displeased, and there is a default caching of current leaf node in the DataTree implementation, which effectively gives O(1) on ValueAD data retrieval (after the first request).

-

Concurrent writes from different processes is handled by optimistic concurrency, however in-process thread safety has not yet been developed for the writes.

Nice type pattern matching in newer versions of C#, similar to rust:

-

The GetRangeAsync algorithm is currently not ordering the data as is, so we manage that by ordering at the client when the data has arrived. This can probably be fixed if thinking a little bit more.

-

It was a joy to write these tests, because most of it just worked. There were only a few minor adjustments. It’s a significant step going from what you envision and have coded, to passing tests, which is that first level of confirmation that the idea works.

(Some do a sort of TDD where you basically cannot write anything without starting with the UnitTests. I find that a bit inhibiting for creativity. You could say that it’s a small conflict between the creative mind and the satisfaction in controlled growth, expansion and confirmation of the functionality.)

End

This is a short update, but this stuff contains so much goodies that I hardly know where to begin ![]() and frankly am I bit too tired today to write it all.

and frankly am I bit too tired today to write it all.

Instead of one giant post, I might do several smaller updates which covers various details.